Lens testing and lens design in the digital age.

Introduction

Photographic equipment in the glorious mechanical era consisted of parts that transformed energy into movement and used mechanical components to convey information. A spring released energy to set a shutter curtain in motion. A needle driven by a rotating wheel informed the user about the correctness of the exposure settings and the position of a pin on a rotating axle informed the shutter mechanism to set a shutter speed of 1/30. With the introduction of electrical and later electronic components, many moving parts in a camera were replaced by electronic circuits, often more reliable and more accurate than the mechanical parts they replaced.

Photographic technique did not change substantially however. The chemical process of interaction between photons and silver-halide molecules embedded in transparent gelatine layers stayed the basic technique for capturing information about the world. Photography could claim to be the only process that used mechanical means to capture an image without human interference beyond setting the exposure and framing the scene. The use of the solid-state sensor to capture a scene and transform this result into a visible image did change the photographic process substantially. A paradigm shift was initiated but is still not accepted with full implications for the state and future of photography.

Antonio Perez, chairman of Kodak, recently gave a presentation at CES in Las Vegas, where he went a step further and declared that the full benefit of digital imagery (infoimaging, as it is often called) will emerge only after the analog paradigm and workflow have been abandoned. This approach may be a bridge too far for many photographers who are trained in the classical methods.

In the classical workflow there was a time lag between the moment the picture was made and the moment the picture could be viewed. In between was a laborious and limited process of chemical transformations. Optics was designed to take advantage of the peculiarities of the silver-halide grain structures and was adjusted to evade problems. Vignetting and distortion as examples should not be allowed to become visible, as these aberrations would destroy the image. And you had hardly any means to counteract these defects. So optical designers had to create lenses that had as little distortion and vignetting as possible without compromising other demands.

The introduction of electronics in the camera body and the introduction of the solid-state sensor for digital recording of the image allowed another powerful tool to become a vital part in the photographers' armoury. This is the software. In all branches of technology where tools and instruments have become digitized, we see that software takes over many aspects of human control and starts to become a major player in the field. We see this in automotive technology where braking actions are controlled by software, more so than by the driver. And in camera technology we see software that controls anti-vibration and autofocus and exposure among others. This works fine with film and film-less cameras. But with the current sensor technology we extend the role of software inside the camera and outside the camera (as post-processing actions) to control the quality of the image.

The powerful in-camera and post-processing software brings new opportunities and changes in the way we approach the photographic techniques.

The rule of 500 for lens testing.

The first major change concerns the way we test and design camera lenses. In the period when film emulsions and camera lenses were completely independent, lens designers focussed their attention on an imaginary quality standard, reflecting time-honoured requirements from photographers and optical principles. Any high-quality lens had to be good enough to capture any level of detail up to the recording limits of microfilm.

And the lens had to accomplish this alone: signals below the threshold of recording capacity were lost.

With the digitizing of the image (at first for scientific applications like astronomy), powerful software could be used to amplify weak signals to useful levels, and deconvolution algorithms can even reconstruct blurred images into sharp(ish) pictures.

With silver-halide systems, the complexity of the imaging chain is built into the film and processing chemistry. Digital electronic still cameras have many separate steps, each of which can be manipulated by the user. Just as with computers, we experience a gradual merger of hardware and software into one integrated system.

The internal workflow of the digital camera (imaging chain) consists of the following steps:

1. Image capture (lens optics, optical pre-filtering, colour filter arrays, image sensor)
2. Pre-processing (analogue/digital conversion and sampling rate, colour matrixing, spatial filtering, defect correction)
3. Compression and storage (algorithms, storage buffer, data rates, capacity)
4. Reconstruction and display (decompression, enhancement algorithms, digital to analogue conversion)

The Image capture stage introduces a number of image degradations not known in silver-halide processing: the discrete sampling of the CCD sensor and the colour filter array (CFA) generate aliasing artifacts (reduction of high frequency information to low frequency information that can be handled by the sensor array) and noise. The optical pre-filter then reduces these artifacts, but this reduces image quality again. It is clear from this description that the lens optics in front of the sensor need to be tuned to the many variables introduced by the pre-filtering and the sampling and colour filter software. Or the designer can tune these aspects to optimize the inherent optical quality of the lens. Either way there is interdependency here.

The Pre-processing stage is very complex. The analogue voltage of each pixel of the sensor array is digitized, and the spatially multiplexed colour image (a consequence of the CFA) is reconstructed into three separate colour images, each with 8 bits (or more) per pixel. This image processing includes spatial interpolation and colour matrixing.

The Reconstruction stage involves Raw engines, JPEG and TIFF algorithms, Photoshop manipulations (unsharp masks and an endless list of actions), printer drivers, and rendering algorithms.

The imaging chain, in short, has these components that mainly influence the final result:

camera lens + optical pre-filter + CCD sensor + image enhancement software + printer algorithms + paper quality.

Testing a lens attached to a digital camera without taking into account the rest of the imaging chain, which often has more impact on the final result, is a precarious act.

There are too many variables that can have impact on the final result and there is no standard method for fixing these variables to generally accepted rules. And to be honest, camera producers have their own secret algorithms that may have impact on the final result.

Therefore we see now two approaches emerging in popular magazines and the consumer culture.

Number fetishism

The final image is a digital file that can be analysed in detail by any competent software program, and that is what is being done by some major German magazines, which compete with each other to provide as many test results as the software can handle. The basic idea is quite simple: you take pictures of an array of test patterns (the Siemens star, for example) at every possible distance setting and at every possible aperture. Then you let the software analyse the spatial patterns at every location, distance and aperture and let it find the highest overall contrast, the edge (local) contrast at every setting, the lowest vignetting value or whatever else you wish to analyse quantitatively. The magazines boast that they analyse millions of measurements per camera/lens combination, do some intelligent averaging over all these results and present the reader with one single merit figure that is supposed to represent the inherent potential of this imaging chain. The bottom line is a statement like this: lens A on a Canon 20D gets a figure of merit of 54 and the same lens on a Nikon D70 gets 55. Therefore the Nikon combination is better.

This approach obviously represents the ultimate in useless number fetishism, but the other approach, as exemplified by most internet sites dedicated to digital photography is at least as meaningless: here we see often a comparison of two pictures taken with different lens/camera combinations and the user can do his visual comparison, based on screen shots. Intuitively the latter approach is the more satisfying as you at least see the results. But what you do not see is the way the imaging chain is parameterised.

And we may legitimately ask how representative these pictures may be.

Here I would like to introduce my rule of 500. We are all familiar with the MTF graphs that are being distributed by several lens manufacturers. While highly accurate and correct in representing the optical potential of a lens, they are difficult to interpret and relate to the real world of images. The tests of the magazines mentioned above are even less informative. Why?

Any lens exhibits a number of characteristics that are difficult to measure, if at all. One of the main issues is the behaviour of the lens in the presence of stray light (veiling glare and secondary reflections). But this is too general: we need to study this in bright daylight, in dark but contrasty situations, at several apertures and with the light sources at different distances, angular positions and several other parameters. We need to know the behaviour of the lens at several distances, not only close up and at infinity. We need to see the colour reproduction in several lighting situations. We need to study the unsharpness gradient at several distances, with the background and foreground subjects at different positions relative to the main subject. And we need to study the most important topic of focus shift when stopping down and its impact on the shift in relative fore/background unsharpness. These topics define a lens in real-life picture taking, in addition to the spatial resolution and the distribution of the image quality over the image area.

To get a good idea of how a lens performs we need to take at least 500 pictures! And not of a test chart but of real life scenes where you can study all the effects listed above.

This rule is equally valid for solid-state imagery and silver-halide recording systems.

Testing lenses with film-canister loaded cameras can be done with slide film as the final result. This is a simple and straightforward process as all the post-processing is inherent in the chemical reactions of the film. Digital files, however, need to be analysed in combination with post-processing tools. The inherently unsharp images of the digital camera can be improved by a host of tools and the choice of the appropriate Raw converter program may be more important than the choice of a lens. Image quality now needs to be seen as the product of optical quality and software quality.

In the past we could combine the MTF graphs of the lens and of the film (by multiplying them, frequency by frequency) and get a cascaded final result. If we then add the third MTF graph (the one of the eye itself) we have the total imaging chain quantified and interpreted to the limiting values.
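The cascading of MTF curves can be sketched in a few lines. For a chain of linear components the system MTF is normally taken as the product of the component MTFs at each spatial frequency. The contrast values below are hypothetical and only serve to illustrate the arithmetic; they are not measurements of any real lens, film or eye.

```python
def cascade_mtf(*curves):
    """Multiply MTF curves that are sampled at the same spatial frequencies."""
    lengths = {len(curve) for curve in curves}
    if len(lengths) != 1:
        raise ValueError("all curves must be sampled at the same frequencies")
    system = []
    for values in zip(*curves):
        product = 1.0
        for value in values:
            product *= value
        system.append(product)
    return system


# Hypothetical contrast values (0..1) at 10, 20 and 40 lp/mm.
frequencies_lp_mm = [10, 20, 40]
lens_mtf = [0.90, 0.80, 0.60]
film_mtf = [0.95, 0.75, 0.50]
eye_mtf = [1.00, 0.90, 0.50]

system_mtf = cascade_mtf(lens_mtf, film_mtf, eye_mtf)
for frequency, contrast in zip(frequencies_lp_mm, system_mtf):
    print(f"{frequency:>3} lp/mm: {contrast:.3f}")
```

Because every factor is at most 1, the cascaded contrast at a given frequency can never be higher than that of the weakest component in the chain.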

With image files and processing software this simple cascading model is gone. Depending on the skill of the user and his/her requirements any digital file can be manipulated by a host of software programs, many totally removed from the original optical component in the chain. Many programs exist that can be used to correct colour shifts, colour fringing, distortion and vignetting and even blurring (by defocusing or movement).

We will surely see in the near future a new type of lens from all manufacturers that integrates optical characteristics with software-assisted aberration control built into the camera or into the proprietary post-processing software in the RAW converters.

The other side of the coin.

Now that we need software to transform a digital file to a decent picture, there is no reason that we should stop at this step in the imaging chain.

All photographers take for granted that we can and do manipulate our images in the post-processing stage. The most frequently encountered remark these days concerns the role of Photoshop as the tool not of choice but of necessity for digital photographers. There is even a whole industry created around Photoshop how-to books and Photoshop training centres. Countless are the remarks that it does not matter how and what you photograph, as long as there is Photoshop to manipulate the digital files residing on the flash cards. In a sense this is true. We see a generational divide here: many experts in the photographic world tell me that the under-30 generation has no relation to traditional photographic values and sees photography and current digital cameras as the dinosaurs described by Antonio Perez. It is indeed telling that the current best-selling artists in the photographic world create images that are heavily manipulated in Photoshop, so much so that the original sources are transformed to a new visual level of awareness.

I am certainly not in the position to make any comments on this development. Personally I will accept any course that art will take and wherever photography or infoimaging will lead us mere mortals. I am no artist and have never pretended to be one. I take my pictures for pleasure or as an object for analysis of optical phenomena. That position gives me all the freedom I need.

Pictures engrained in silver halide structures and recorded as numerical values in a digital file: the upshot

I have done extensive comparisons in recent months between film-based pictures and raw developed image files. I have no intention to go down the Photoshop/image manipulation road. I prefer the classical workflow in digital imagery. That is why I use the Canon EOS 5D (classical image size and big clear viewfinder) with 24-105 next to my Leica MP 3 with ASPH 50, 75 and 90mm. For me that is fine, because when I switch during a shoot from film-loaded body to flashcard-loaded body with the same lenses/focal lengths, I have the same perspective, magnification and cropping frame of the scene.

By the way: I also use a Minolta Flashmeter VI for exposure assessment. I assume that these magnificent instruments will go down with the exit of the Minolta/Konica photographic activities. So buy one as long as you can!

The films I use currently are the slide films from Fuji, mainly the Velvia 100 and the Provia/Velvia 100F. With the ASPH lenses from Leica (50,75,90mm) I get critically sharp pictures that will give any aficionado for high-resolution pictures or (expressed more accurately) for best image clarity a boost of adrenaline when looking at these slides.

The same pictures made with the 5D and processed by some of the best Raw converters (CaptureOne, RAW developer or RAWshooter and Lightroom beta) do however reveal more details in the high frequency range. It is simply true that the software can extract more detail out of the fuzzy fine details captured by the EOS sensor. The inherently higher recording capability of the Leica lenses in combination with the recording capability of the film is being reduced by the scattering of the light in the emulsion. The details are recorded but below a level that can be extracted by normal means. Micro-contrast will drop below a level that can be perceived by the human eye as a just perceptible difference in contrast. So detail is lost forever.

The limiting factor for the digital capture is the deterioration of the image by the CFA and the spatial filtering. But the postprocessing software can rescue more details and the enhancement of fuzzy detail by software is quite efficient. We have to face this conclusion without emotion: the image file has more potential than the silver-halide grain structure.
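How software can enhance fuzzy detail may be illustrated with the classical unsharp mask: blur the signal, take the difference between the original and the blurred version, and add that difference back. The sketch below works on a one-dimensional row of hypothetical pixel values with a simple box blur. It is only an illustration of the principle and makes no claim about what any particular Raw converter actually does.

```python
def box_blur(signal, radius):
    """Average every sample with its neighbours within the given radius."""
    blurred = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius):min(len(signal), i + radius + 1)]
        blurred.append(sum(window) / len(window))
    return blurred


def unsharp_mask(signal, radius=1, amount=1.0):
    """Add the difference between the signal and its blurred copy back in."""
    blurred = box_blur(signal, radius)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]


# Hypothetical soft edge: dark area (10) rising gradually to bright area (50).
soft_edge = [10, 10, 10, 30, 50, 50, 50]
sharpened = unsharp_mask(soft_edge, radius=1, amount=1.0)

print("original :", soft_edge)
print("sharpened:", [round(value, 1) for value in sharpened])
```

The flat areas stay as they are, while the values on either side of the edge are pushed apart, which raises the local (edge) contrast. Nothing that was not recorded is invented; only the contrast of what is already in the file is increased.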

This conclusion does not imply that film-based photography will be buried in two years' time, as Kodak assumes. We should however prepare ourselves mentally for a situation in which film-based Leica photography will become a niche in a niche in a niche.

Leica photography with film canisters is still fun and a pleasure to be involved with. It will not give you the best results, performance-wise, that are now possible. We have to wait for the Md to see what Leica can deliver and whether it can catch up with the main players.

The truth about 'digital' lenses

There seems to be a global consensus that lenses designed for image capture on film are unfit for the same image capture on solid-state devices like CCD sensors. The principal argument is related to the qualities of the capture medium.

Characteristics of film based technique

The emulsion layer that holds the light-sensitive silver halide grains has a certain thickness and contains up to twenty layers of grains, any one of which can be struck by photons and therefore is part of the latent image. Light rays that strike the surface of the emulsion layer at an oblique angle will travel through the depth of the gelatine layer and will be stopped by some grains in the lower layers. So the angle of incidence is no problem at all. Ideally the film should lie flat, but film is never flat at the film gate and will bulge. The depth of the emulsion layer and the depth of field tolerance will offset this state of affairs, and optical designers can use this characteristic to compensate for the problem of curvature of field. The size and distribution of the individual silver halide grains support an almost infinite level of resolution and only the best lenses can exploit this performance. But there is one big caveat: high resolution is limited in practice by two factors, camera stability and light scattering in the emulsion. Very fine detail can be recorded quite faithfully, but the micro contrast and the contrast at the limiting frequency are very low and details are not detectable unless one uses the utmost care and technique.

In chemical photography, the complexity is built into the film and the processing chemistry, and neither the user nor the optical designer can change or influence the basic parameters.

Characteristics of solid-state technology

Let me be pedantic to start with. We use the words analogue and digital technologies to distinguish between two different capture systems. But we should be careful here.

An analogue measurement implies that the value to be measured is translated into another value that responds in the same way to differences in values. A digital measurement implies that the value is transformed into a number. In a digital watch there is only a counting mechanism: every one thousandth of a second a number is changed. In an analogue watch the time is expressed as a circular movement, analogous to the movement of the earth. Digital is not the opposite of continuous. The true opposite of continuous is discrete: the shutter speed can be changed in steps, which is discrete, and the distance can be changed steplessly, which is continuous. A digital watch can be more accurate than an analogue one and a digital file can hold more illumination differences than a silver halide based negative.

The very nature of the discrete imaging elements (pixels) of the CCD introduces a source of image degradation not found in film-based photography. Note that it is not the digital (numerical) nature of the capture that is the problem, but the discrete sampling of the image. The distribution of the silver halide grains in three dimensions allows for the reproduction of low and high frequencies at the same time and without interference. But with CCDs the high-frequency information is transferred to low-frequency information (Nyquist rule) and this is not without problems. Most often a low-pass filter is used in front of the sensor to reduce the aliasing artefacts. But Leica with the DMR uses software to bypass the low-pass filter and the new Mamiya ZD camera has the option of removing the low-pass filter when required. Digital electronic still cameras (DESC) also need a colour filter array to translate the monochrome information into a colour image. In fact the electrons captured by the pixel are sensed as an analogue voltage and are digitized in a separate step.
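The folding of high frequencies into low ones can be put into numbers. The sketch below assumes a hypothetical pixel pitch of 8 micron, which gives 125 samples per millimetre and a Nyquist frequency of 62.5 cycles per millimetre; any detail finer than that reappears in the sampled image at a lower, false frequency. The pitch and the function name are assumptions chosen for illustration, not data for a specific sensor.

```python
def aliased_frequency(signal_freq, sampling_freq):
    """Apparent frequency of a periodic detail after discrete sampling.

    Frequencies above the Nyquist limit (half the sampling frequency)
    fold back into the range between zero and the Nyquist limit.
    """
    if sampling_freq <= 0:
        raise ValueError("sampling frequency must be positive")
    nyquist = sampling_freq / 2.0
    folded = signal_freq % sampling_freq
    if folded > nyquist:
        folded = sampling_freq - folded
    return folded


pixel_pitch_micron = 8.0                      # hypothetical sensor
samples_per_mm = 1000.0 / pixel_pitch_micron  # 125 samples per mm
nyquist_cycles_per_mm = samples_per_mm / 2.0  # 62.5 cycles per mm

for detail in (40.0, 62.5, 100.0):
    apparent = aliased_frequency(detail, samples_per_mm)
    print(f"detail at {detail:5.1f} c/mm is recorded as {apparent:5.1f} c/mm")
```

A pattern of 100 cycles per millimetre, which a good lens can deliver with ease, is recorded by this sensor as a coarse pattern of 25 cycles per millimetre. This is the artefact that the low-pass filter is meant to suppress.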

The main characteristics of the CCD sensor are the fact that the sensor is flat (plane), constructed as a discrete matrix of pixels and not transparent (has no depth). The flatness of the sensor is bad for the curved nature of the image created by the lens. The opaque nature of the sensor cells implies that the oblique angle of incidence of the light rays striking the sensor surface must be limited. Otherwise only a few photons will be captured. The use of a small condenser on top of every single sensor element does improve the situation and Kodak claims that an angle of 20 degrees can be allowed without any problem. There is much discussion about this angle, but it is only relevant in the situation that the camera has a very small sensor area and a large diameter of the exit pupil. Then the marginal rays will strike the surface at extreme angles. But in the now common sensor formats in the D-SLR's (the APS format) the problem is much less important. Presumably only extreme wide-angle lenses will suffer some additional vignetting.

Do we need new lenses in front of the sensor?

Nothing that has been said above can be interpreted as a dictum to design lenses that are specifically adapted to the characteristics of CCD capture. A lens that is designed for optimum performance for film-based image recording (high resolution, flatness of field, good contrast) will produce excellent imagery on film and on a CCD sensor. A good design for film capture will be a good design for solid-state capture too! The fact that captured images will be stored as digitally sampled images has no relevance for the lens design. The only issue might be the determination of the cut-off frequency of the MTF curve to stay within the Nyquist frequency. But you cannot design a lens where the MTF value drops abruptly from a certain value to zero. If that could be done, we would not need low-pass filters to remove the high-frequency content passed by the lens. All the algorithms developed for post-processing the digital file (removal of noise, increase of sharpness, adjustment of colour, optimization of the tonal scale of the electronically captured images) operate on the image file that is captured by lenses of classical (film-based) designs.

Manufacturers today make some effort to promote so-called digital lenses, optimized for digital capture and with improved performance compared to the film-based versions. You can indeed see an improvement in image quality, but that is not created by a 'digital' design.

We are all familiar with the fact that the sensor area in most D-SLRs is smaller than the traditional 35mm negative. This fact explains the correction factor for focal length (a reduction of angle of view would be a better description). But what is not often discussed is the fact that the reduction of the angle of view has the same effect as stopping down the aperture of the lens. In both cases the marginal rays are cut off and are no longer part of the image-forming process. The net effect is this: if we have a lens with a maximum aperture of 2.8 and reduce the angle of view, we get in fact the image quality of a lens stopped down to 4 or 5.6!
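The reduction of the angle of view follows from simple geometry: for a lens focused at infinity the diagonal angle of view is 2·atan(d / 2f), with d the diagonal of the capture area and f the focal length. The sketch below compares the 24 x 36 mm negative with an APS-type sensor that is assumed here to measure 22.5 x 15 mm (actual sizes differ per manufacturer). It shows only the geometry of the smaller field; the equivalence with stopping down is an argument about image quality that this calculation does not attempt to model.

```python
import math


def diagonal_angle_of_view(focal_length_mm, width_mm, height_mm):
    """Diagonal angle of view in degrees for a lens focused at infinity."""
    diagonal = math.hypot(width_mm, height_mm)
    return math.degrees(2 * math.atan(diagonal / (2 * focal_length_mm)))


FULL_FRAME = (36.0, 24.0)  # traditional 35mm negative
APS_SENSOR = (22.5, 15.0)  # assumed APS-type sensor size, for illustration

focal_length = 50.0
angle_full = diagonal_angle_of_view(focal_length, *FULL_FRAME)
angle_aps = diagonal_angle_of_view(focal_length, *APS_SENSOR)
crop_factor = math.hypot(*FULL_FRAME) / math.hypot(*APS_SENSOR)

print(f"50mm on 24x36:      {angle_full:.1f} degrees")
print(f"50mm on APS sensor: {angle_aps:.1f} degrees")
print(f"correction factor:  {crop_factor:.2f}")
```

With these assumed dimensions the correction factor is 1.6 and the 50mm lens uses only the central part of its image circle, which is the part where the oblique rays contribute least.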

A recent experience illustrates this behaviour. I use a Canon EOS 33 with a Sigma macro lens 2.8/50mm. I am very unhappy with the performance of this lens (low contrast and limited resolution). So I exchanged the lens for the Canon version, the 2.5/50mm. Again, I am not so happy with this lens either. But the new Canon macro 2.8/60mm is a winner. The optical design of this new version is not so different from the previous version, but the reduction of the angle of view cuts off the bad influence of the marginal and oblique rays, which are responsible for the spherical aberration, coma and astigmatism and of course curvature of field. The designer only has to focus on the centre part of the image and can neglect all disturbing influences of the edge rays and oblique rays. The second reason why so-called digital lenses offer improved performance is the general improvement in production techniques that guarantees a smaller tolerance band.

The designers of film-based camera lenses are in a much more difficult position: they must design lenses for a much wider angle of view and a larger capture area and must face a much higher level of optical errors than the designers who have to care for a small format with a reduced angle of view.

There is no magic here and the 'digital' lenses are not endowed with superior image quality by a new design philosophy, but take advantage of the effect of the stopped-down aperture that the smaller angle of view makes possible and also of the general higher manufacturing quality.

Lens testing again! (Jan 29, 2008)

There is a remarkable difference between the conclusions of lens reviews by persons who employ the method of visual inspection, persons who use the method of image comparison like dpreview and the persons who use quantified results like the German photographic magazines on the one side and the conclusions that can be derived from results from optical labs that use sophisticated equipment.

If you sit behind the lab equipment and see the numbers flash on the displays, look at the graphs being printed and measurements being generated, you cannot but feel impressed by the sophistication and expertise required for a truly in-depth review of a lens.

A zoom lens from company A is fully dissected and analyzed and in fact gets a bad mark, yet gets high praise from the press and on the many websites of photographers. Then we have to ask ourselves about the validity and relevance of both the lab results and the popular opinions.

It is my experience that a lens test that shows results that portray the true capabilities and characteristics of a lens is a quite laborious task that cannot be done without the use of sophisticated equipment. I am therefore inclined to say that when there is a difference between the results of the optical lab and the popular press and popular opinions, the lab results have priority.

But one can also argue that lenses are used by photographers and that, when they are satisfied with the performance in real life situations, one can safely claim that the lens is good enough. This position is closely related to the basic principle of democracy and the law of opinion polls: when a thousand persons say that A is the best, then we have to conclude that A is best by popular vote.

This is the view of the marketing department of many photographic companies: if it sells by big numbers, then it is a good product.

A lab test may show lens decentring, focus shift, chromatic aberrations, flare, mechanical defects and so on, but as long as the user is happy, there is no issue. So it seems.

The counter argument might be that most photographers take pictures that are not too demanding on the lens and that they will never discover the defects or lack the knowledge to see them. A major role in this respect can be attributed to advertising and the bandwagon effect. If advertising claims that a lens has superior qualities, the press is quite often inclined to accept this claim, and when their own reviews do not blatantly contradict it, the claim is taken as true.

As an illustration I can point to reviews in the press of the new 20+ megapixel Canon 1Ds MIII, where you will often see the statement that the extremely high resolution of the sensor can only be exploited when you use high resolution lenses like the Canon 2.5/50mm or even the new Canon 1.2/50mm. The 2.5/50mm is without any doubt a very capable lens, but it is certainly not the best lens in the Canon stable for high-res imagery. Here one falls into the trap of assuming that a macro lens must by definition have superior resolution compared to other lenses. And by repeating this statement over and over again, readers do assume that it is true, because everybody tells the same story.

It is quite difficult, if not impossible, to get a reliable assessment of the true capabilities and characteristics of a lens. As a case, let us take a look at some results (out of an extensive array of measurements) for the Color-Heliar 2.5/75 and the Apo-Summicron-M 2/75.

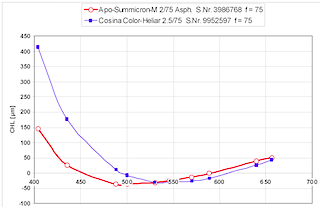

Below you see a graph of the chromatic aberrations of both lenses (blue is Heliar, red is Summicron). Note at first that the color correction of the Summicron is excellent over most of the visual spectrum and not only are the numbers OK, we also see that the correction is very homogeneously applied to the whole spectrum. The result is an apo-like correction in the whole visual region. The Heliar is less well corrected and especially in the blue part of the spectrum has aberrations that are three times as big as the Summicron.

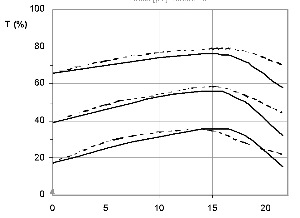

You might assume that is not relevant for practical photography, but take a look at the MTF graphs below which represent the contrast transfer when the illumination of the subject has a high proportion of the blue spectrum. Left is the Heliar graph and right is the Summicron graph. The Heliar lens exhibits a significant drop in contrast and a severe shift of focus whereas the Leica lens shows only a slight reduction of contrast.

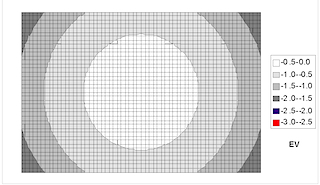

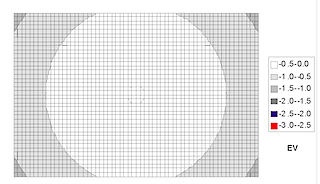

A second example is the distribution of the illumination over the image field at full aperture. Below left is the Heliar and below right is the Summicron. Note that the Summicron fingerprint shows a very even illumination and the Heliar has only a narrow field with good illumination. The level of optical correction of the Summicron is more demanding, as the rays in the entrance and exit pupils have to be directed and corrected out to the outer zones of the pupil, where the Heliar designers only have to take care of the centre rays. This is a classical approach by many designers in the past: when you allow a higher level of light fall-off in the outer zones you can in fact 'mask' the existence of aberrations and you have a much easier job in correcting the lens. The Summicron designers had to cover and take care of a much wider image circle than the Heliar designers.

When comparing lenses by inspection, you might notice little difference in actual performance between both lenses, but this comparison is based on the (erroneous) assumption that the nominal specifications of both lenses are identical to the factual specifications. As noted in a previous comment about the Heliar, this is not the case: the wide open performance of the Heliar is optically not comparable with that of the Summicron lens. This can be derived from the pupil illumination distribution.

Without objective measurements, like the ones shown here, any lens assessment stands on a shaky base.

Everyone knows that a Barbie doll is anatomically incorrect, but the shapes and forms are eye-candy for most of us and every girl wants to have such a figure. Fashion models try to be as close as possible to these dimensions and when they do not succeed, there is always Photoshop and the many clones and derivatives.

Currently software is slowly becoming the most important element in the imaging chain. Photoshop may be a post-processing link in the chain, but it seems that no one can do without it. The important point of the use of Photoshop is its tendency to beautify the basic image: if you are not satisfied with whatever is captured by the camera and lens, it can be altered in every possible way.

This tendency is now part and parcel of many lens evaluations that are erroneously referred to as lens tests. First of all, these descriptions are impressions of the final result of an imaging chain that involves the camera, the lens, the on-board software and the post-processing software. It is becoming increasingly difficult to separate these different steps and get a good view of their impact on the final result.

A classical lens test should be based on an MTF diagram, because it gives a good account of the residual aberrations of the lens and its degree of optical correction. But the MTF does not tell you anything about focus errors (it does tell you about focus shift!), bokeh, flare or color correction. The MTF provides an analysis of the lens and its optical corrections. It does not tell you a word about manufacturing tolerances, camera mechanical errors and user errors.

The basic fact now is that many characteristics of a lens can be influenced by software: vignetting can be compensated, color corrections can be made, contrast can be increased, fine detail can be enhanced and so on. The lens stays an important parameter, but it becomes increasingly difficult to say anything meaningful about the performance of the lens when the lens is only one part of the imaging chain. There are a few programs (DCRaw is one of them) that allow the processing of the raw image file with a minimum of influence. But even then the camera cannot be excluded from the chain.
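As an illustration of how software can compensate vignetting, here is a minimal sketch in Python. It assumes a simple quadratic fall-off model with a made-up strength parameter; the function names and the model are hypothetical and are not taken from DxO, DCRaw or any camera firmware, which generally rely on measured lens profiles.

```python
import math

def normalized_radius(x, y, width, height):
    """Distance from the image center, scaled so that the corner is 1.0."""
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    r_max = math.hypot(cx, cy)
    return math.hypot(x - cx, y - cy) / r_max if r_max > 0 else 0.0

def falloff(r, strength):
    """Assumed relative illumination: 1.0 in the center, 1 - strength in the corner."""
    return 1.0 - strength * r * r

def apply_vignetting(image, strength):
    """Simulate the lens: darken each pixel according to the assumed fall-off."""
    h, w = len(image), len(image[0])
    return [[image[y][x] * falloff(normalized_radius(x, y, w, h), strength)
             for x in range(w)] for y in range(h)]

def correct_vignetting(image, strength):
    """Simulate the software: multiply each pixel by the inverse of the fall-off."""
    h, w = len(image), len(image[0])
    return [[image[y][x] / falloff(normalized_radius(x, y, w, h), strength)
             for x in range(w)] for y in range(h)]

# A uniformly lit 5 x 5 test field, one stop of fall-off in the corners.
flat = [[100.0] * 5 for _ in range(5)]
captured = apply_vignetting(flat, strength=0.5)
restored = correct_vignetting(captured, strength=0.5)
```

The corrected file looks evenly illuminated, but the corners were multiplied by a factor of two, and any noise recorded there was multiplied with them. The correction hides the fall-off in the result without changing what the lens actually delivered to the sensor.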

To exaggerate a bit: in the near future you may have the choice between a 200 dollar lens combined with a 100 dollar software program, delivering final results hardly distinguishable from those of a lens costing 2000 dollars. This is of course not true: the best lens will produce the best image file, but software can do much. This is the eternal dilemma between the idealist and the pragmatist.

Below you will find two pictures, one is a selection of the image in the center and one at the edge. The full photograph is a contre-jour image of some trees with rain droplets on the branches. The lens is the Summilux-M 1.4/50mm Asph. on the digital M.

The basic image file is on the left and the corrected version on the right. The correction was done with the new version of DxO, version 9. Note the reduction of the slight chromatic aberrations and the increase of detail in the flare region. This is a 100% view of the image file.

When printed on a normal A4 size, there is nothing to be seen of these defects.

And this is the important part of the topic of lens performance evaluation. When you look at the 100% magnification, it is as if you are looking through a microscope with a large magnification. When you are looking at an A4 print or even an A3 print, you are looking at a print that is meant for normal viewing at normal distances. You make pictures for viewing, not for microscope study. And an assessment of the quality of a lens, or of the result of the imaging chain, should be made with the print in focus, not the screen. In the past everyone would laugh at the person who would stand close in front of the projection screen to see the image defects. This was not realistic.
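The difference between the 100% view and the print can be put into rough numbers. The sketch below, in Python, assumes that at 100% one image pixel is shown by one screen pixel; the image width of 6000 pixels and the screen density of 100 pixels per inch are illustrative values only, not the specifications of any particular camera or monitor. Viewing distance is ignored.

```python
MM_PER_INCH = 25.4
A4_LONG_SIDE_MM = 297.0  # ISO 216
A3_LONG_SIDE_MM = 420.0  # ISO 216

def full_image_width_mm(image_width_px, screen_ppi):
    """Width the whole image would have if all of it were shown at 100%."""
    return image_width_px / screen_ppi * MM_PER_INCH

def enlargement_vs_print(image_width_px, screen_ppi, print_long_side_mm):
    """How many times larger the 100% view is than the print (linear)."""
    return full_image_width_mm(image_width_px, screen_ppi) / print_long_side_mm

def print_ppi(image_width_px, print_long_side_mm):
    """Image pixels per inch of paper when the file fills the long side."""
    return image_width_px / (print_long_side_mm / MM_PER_INCH)

# Hypothetical example: 6000 px wide file, 100 ppi screen.
virtual_width = full_image_width_mm(6000, 100)
ratio_a4 = enlargement_vs_print(6000, 100, A4_LONG_SIDE_MM)
ratio_a3 = enlargement_vs_print(6000, 100, A3_LONG_SIDE_MM)
density_a4 = print_ppi(6000, A4_LONG_SIDE_MM)
```

Under these assumptions the 100% view corresponds to a picture roughly 1.5 meters wide, about five times the linear size of an A4 print and about 3.6 times that of an A3 print. A defect that spans a few pixels on the screen therefore shrinks to a fraction of a millimeter on paper.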

What I would like to see is this: what would the conclusion about the performance of a given imaging chain be if the evaluation were based on a high-quality print of size A4 or A3 instead of a visual analysis done at 100% magnification of the image file on a screen? Every serious theory of vision and perception will tell you that the eye is not a passive receiver of the signals arriving at the retina, but that the perception process is an active reconstruction of these signals based on rules that are activated in the brain. There is therefore a big danger in visual analysis: you do not see what is there, but you see what you want to see.

That is the nice thing of the MTF analysis: it is made without the mediating process of viewing and perceiving.

The upshot is this: many evaluations that can be found on the internet, free or paid, that discuss the results of the imaging chain are based on visual examination (which is fallible by definition) of an image file in conditions that are not representative of normal photographic practice. And the evaluations are based on the results of an imaging chain with so many variables that are not separated in a quantifiable way, and this alone makes any statement about the performance of a lens a bit fragile.

It is quite understandable that the other extreme (the Imatest and Image Engineering approach), which delivers only numerical results, is favored by many because here the suggestion of subjective interpretation has been eliminated.

To return to the Barbie doll analogy: a lens has a range of characteristics that make it more or less suitable for the reproduction of some aspect of reality. The reviewers who were active in the second half of the 20th century knew this very well and did not try to rank lenses on a one-dimensional scale (2200 LP/PH is better than 2150 LP/PH), nor on a subjective scale (the bokeh of this lens is awful), nor on a pseudo-lab exercise (at 100% magnification I see a slight lateral chromatic aberration in an unsharp zone), but they tried to give the lens a characterization that could help users to make a well-balanced choice.

The assumption that a lens should be as perfect as a Barbie doll, and that when you find an error you may call the lens a bad one, is a flawed one. But the art of real quality testing, which gives the lens a profile that is meaningful for photographers who look for real-world assignments and not Barbie doll fantasies, is slowly vanishing.